AI Breakthroughs Unleashed: OpenAI's Code Red, Apple's Clara Compression, and Real-Time Video Magic

OpenAI declares a "code red" over Gemini 3 and readies a secret "Garlic" model, Apple drops its game-changing CLaRa compression framework, Microsoft kills voice lag, Alibaba streams infinite avatars, and Tencent gives everyone pro-grade video generation.

By Allan Ali, December 10, 2025

The AI world is ablaze with innovation this week, as leaks, releases, and research papers reveal a frantic push toward more efficient, real-time, and immersive technologies. OpenAI's internal scramble to counter Google's Gemini 3 dominance, Apple's revolutionary document compression framework, Microsoft's latency-busting voice model, Alibaba's infinite-streaming avatars, and Tencent's home-run video generator signal a maturation in AI—from raw compute to practical, deployable tools. These developments aren't just headlines; they're reshaping how we build and interact with intelligent systems.

This roundup is inspired by AI Revolution's latest YouTube video, "OpenAI Code Red, Apple Clara, Microsoft Vibe Voice, Alibaba Live Avatar, Tencent Hunyuan Video 1.5" (uploaded December 9, 2025), in which the channel's host dissects these stories with technical depth and forward-thinking analysis. As AI's energy and compute demands skyrocket, 1Host.ing stands ready with scalable cloud hosting optimized for video generation, real-time TTS, and RAG pipelines, so your deployments run smoothly without bottlenecks.

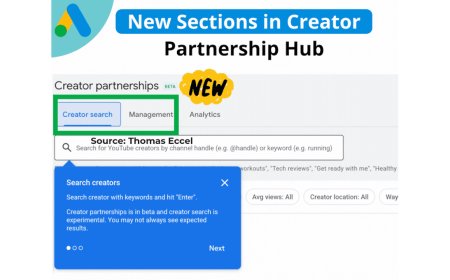

OpenAI's "Code Red": The Garlic Model Emerges Amid Gemini Panic

In a dramatic internal shake-up, OpenAI CEO Sam Altman declared a "code red" after Google's Gemini 3 climbed to the top of the LMArena (formerly LMSYS Chatbot Arena) leaderboard, prompting a user exodus from ChatGPT and a 6% weekly dip in engagement. According to leaks reported by The Information and Bloomberg, Altman halted non-essential projects, such as ChatGPT advertising, health agents, and a personal assistant codenamed Pulse, to refocus on core improvements in speed, reliability, and personalization.

At the heart of this urgency is a new model codenamed "Garlic," which OpenAI's chief research officer Mark Chen reportedly presented to colleagues. Internal tests reportedly show Garlic outperforming Gemini 3 and Anthropic's Opus 4.5 on reasoning and coding benchmarks. The gains are attributed to an overhauled pre-training process that teaches the model broad connections before fine-grained details, letting smaller, cheaper models hold more knowledge. Garlic is distinct from the earlier Shallotpeat project and could debut as GPT-5.2 or GPT-5.5 in early 2026, with Chen pushing to ship it "as soon as possible." In short, the code red has already pulled OpenAI's next flagship release forward.

Contrast this frenzy with Anthropic's calm: CEO Dario Amodei, speaking at the New York Times DealBook Summit, emphasized the company's enterprise focus, pointing to Claude Code, which hit a $1B revenue run rate just six months after launch. No code red needed. For developers racing toward these frontiers, 1Host.ing's GPU clusters handle intensive pre-training simulations without downtime.

| Model | Key Strength | Status |
|---|---|---|
| Garlic | Reasoning & coding (reportedly beats Gemini 3) | Internal; possible early-2026 launch |
| Shallotpeat | Pre-training fixes | Separate track |
| Opus 4.5 | Enterprise coding | Released; Claude Code at $1B run rate |

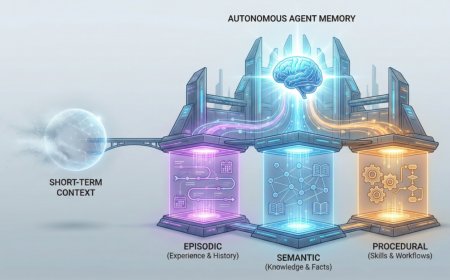

Apple's Clara: Compressing Documents 128x for Smarter RAG

Apple's machine learning team quietly unveiled CLaRa (Continuous Latent Reasoning), a game-changing Retrieval-Augmented Generation (RAG) framework that compresses entire documents into dense "memory tokens"—up to 128x smaller—while preserving semantic fidelity for faster, more accurate querying.

Traditional RAG stuffs raw text into context windows, bloating costs and latency as documents grow. CLaRa flips this: a semantic compressor (built on Mistral-7B with LoRA adapters) generates fixed-size latent vectors via QA-guided and paraphrase-supervised pretraining on 2M Wikipedia passages. Retrieval and generation happen in this unified latent space, with end-to-end training using cross-entropy for answers and MSE for alignment—no labeled relevance data needed.
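To make "retrieval in a compressed latent space" concrete, here is a toy sketch. It is not Apple's implementation: CLaRa learns its compressor with a Mistral-7B LoRA model, whereas this sketch assumes pre-computed token embeddings, swaps in simple mean pooling over fixed windows as a stand-in compressor, and ranks documents by cosine similarity between a query vector and each document's memory vectors. The function names (`compress`, `score`, `retrieve`) and the max-similarity scoring rule are hypothetical.

```python
import math
from typing import Dict, List

Vector = List[float]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Average a non-empty list of equal-length vectors element-wise."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def compress(token_embeddings: List[Vector], ratio: int) -> List[Vector]:
    """Toy compressor (assumption): pool every `ratio` token embeddings into
    one "memory token". CLaRa learns this mapping instead of pooling."""
    return [
        mean_pool(token_embeddings[i : i + ratio])
        for i in range(0, len(token_embeddings), ratio)
    ]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def score(query: Vector, memory: List[Vector]) -> float:
    """Score a document by its best-matching memory token (assumed rule)."""
    return max(cosine(query, m) for m in memory)


def retrieve(query: Vector, corpus: Dict[str, List[Vector]], k: int = 1) -> List[str]:
    """Return the ids of the k highest-scoring compressed documents."""
    ranked = sorted(corpus, key=lambda doc_id: score(query, corpus[doc_id]), reverse=True)
    return ranked[:k]


# Two 8-token "documents" with 2-D embeddings, compressed 4x to 2 memory tokens each.
doc_a = [[1.0, 0.0]] * 8
doc_b = [[0.0, 1.0]] * 8
corpus = {"a": compress(doc_a, 4), "b": compress(doc_b, 4)}
print(len(corpus["a"]))                   # 2
print(retrieve([0.9, 0.1], corpus, k=1))  # ['a']
```

The point of the design is that the query is compared against a handful of fixed-size vectors per document rather than against its full text, so retrieval and generation cost stop scaling with raw document length.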

Benchmarks dazzle: at 4x compression, CLaRa's F1 score hits 39.86 on Natural Questions and HotpotQA, edging out LLMLingua-2 by 5.37 points and PISCO by more than 1 point. In oracle setups, where the relevant passage is guaranteed to be among the candidates, it reaches 66.76 F1, surpassing full-text baselines such as BGE + Mistral-7B. As a reranker, it achieves 96.21% recall@5 on HotpotQA. Released in three flavors (Base, Instruct, and E2E) on Hugging Face under Apple's AMLR license, CLaRa hints at deeper Apple AI integration.
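Recall@5 asks whether the relevant passages show up among a reranker's top five results. The function below is one common way to compute it (the fraction of each query's gold passages found in the top k, averaged over queries); the paper's exact evaluation script may differ, and the passage ids are made up for illustration.

```python
from typing import List, Sequence, Set


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant ids that appear among the first k ranked ids."""
    if not relevant:
        return 0.0
    hits = sum(1 for doc_id in ranked[:k] if doc_id in relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs: List[Sequence[str]], golds: List[Set[str]], k: int) -> float:
    """Average recall@k over queries; runs[i] is the ranking for golds[i]."""
    scores = [recall_at_k(r, g, k) for r, g in zip(runs, golds)]
    return sum(scores) / len(scores)


# Hypothetical rankings; HotpotQA questions typically have two gold passages.
runs = [["p3", "p1", "p9", "p2", "p7", "p4"], ["p5", "p6", "p8", "p0", "p2", "p1"]]
golds = [{"p1", "p2"}, {"p1", "p6"}]
print(mean_recall_at_k(runs, golds, k=5))  # 0.75
```

The first query finds both gold passages in its top five (1.0) and the second finds only one (0.5), giving 0.75; a 96.21% score means nearly every gold passage lands in the top five.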

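For RAG-heavy apps, this means far smaller prompts: at the maximum 128x setting, retrieved context shrinks to under 1% of its original token count (1/128 ≈ 0.8%), while the 4x setting behind the headline F1 scores still cuts it by 75%, with accuracy that beats other compression methods. Host your CLaRa pipelines on 1Host.ing's edge-optimized servers for sub-second queries.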

CLaRa Compression Pipeline (Conceptual diagram of CLaRa's latent space unification. Source: arXiv paper.)

Microsoft Vibe Voice Realtime: Ending AI's Awkward Pauses

Tired of AI assistants that ponder before speaking? Microsoft's VibeVoice-Realtime-0.5B starts speaking in roughly 300 ms, close to instant, using a lightweight text-to-speech (TTS) stack built for "speak-while-thinking" agents.
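The "speak-while-thinking" idea is essentially a streaming pipeline: speech synthesis starts as soon as the first phrase of the LLM's reply arrives instead of waiting for the whole answer. Below is a minimal sketch with stand-in generators. `fake_llm_tokens` and `fake_tts` are hypothetical placeholders, not VibeVoice's API, and the chunking rule (flush at punctuation or every few words) is an assumption.

```python
from typing import Iterable, Iterator, List


def fake_llm_tokens(reply: str) -> Iterator[str]:
    """Hypothetical stand-in for an LLM streaming its reply word by word."""
    for word in reply.split():
        yield word


def chunk_text(tokens: Iterable[str], max_words: int = 4) -> Iterator[str]:
    """Group streamed words into short phrases, flushing at punctuation or max_words."""
    buffer: List[str] = []
    for token in tokens:
        buffer.append(token)
        if token[-1] in ".,!?;:" or len(buffer) >= max_words:
            yield " ".join(buffer)
            buffer = []
    if buffer:  # flush whatever is left when the stream ends
        yield " ".join(buffer)


def fake_tts(phrase: str) -> bytes:
    """Hypothetical stand-in for incremental synthesis; returns placeholder bytes."""
    return phrase.encode("utf-8")


def speak_while_thinking(reply: str) -> Iterator[bytes]:
    """Yield one audio chunk per phrase, so playback can begin after the first phrase."""
    for phrase in chunk_text(fake_llm_tokens(reply)):
        yield fake_tts(phrase)


chunks = list(chunk_text(fake_llm_tokens("Sure, I can help with that request right now.")))
print(chunks)  # ['Sure,', 'I can help with', 'that request right now.']
```

Because everything is a generator, time to first audio depends on how quickly the first phrase arrives, not on the length of the full reply.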

Built on Qwen2.5-0.5B, it pairs the language model with a 7.5 Hz acoustic tokenizer (a sigma-VAE with 7 transformer layers, downsampling 24 kHz audio 3200x) and a 4-layer diffusion head, for roughly 1B parameters in total, small enough for edge deployment. Text streams in directly from an LLM, and the model can generate about 10 minutes of clean, long-form speech within an 8K context. It is built for conversational speech, not music or background noise.
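The tokenizer figures fit together arithmetically: downsampling 24 kHz audio by 3200x gives 24,000 / 3,200 = 7.5 acoustic frames per second, which is why roughly 10 minutes of speech fits in an 8K context. The quick check below assumes one context slot per acoustic frame and reads "8K" as 8,192 tokens; both are simplifying assumptions.

```python
SAMPLE_RATE_HZ = 24_000
DOWNSAMPLING = 3_200
CONTEXT_TOKENS = 8_192  # assumption: "8K" read as 8,192 slots

frame_rate = SAMPLE_RATE_HZ / DOWNSAMPLING           # acoustic frames per second
frames_10_min = int(frame_rate * 10 * 60)            # frames for 10 minutes of speech
slots_left_for_text = CONTEXT_TOKENS - frames_10_min  # remaining room (assumed 1 slot/frame)

print(frame_rate)           # 7.5
print(frames_10_min)        # 4500
print(slots_left_for_text)  # 3692
```

Under these assumptions, 10 minutes of audio uses about 4,500 of the 8,192 positions, leaving the rest for the streamed text that drives it.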

On LibriSpeech test-clean, it scores 2% WER and 0.695 speaker similarity, rivaling VALL-E 2 (2.4% WER, 0.643 similarity) and Voicebox (1.9% WER, 0.662 similarity). On SEED-TTS, it reaches 2.05% WER with 0.633 similarity. Released on Hugging Face under the MIT license, it is primed to run as a microservice alongside an LLM.
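Word error rate, the metric behind the 2% figure, is the word-level edit distance (substitutions + deletions + insertions) between a speech recognizer's transcript of the generated audio and the reference text, divided by the number of reference words. A minimal implementation follows; real evaluation pipelines also normalize punctuation and numbers first, which this sketch reduces to lowercasing.

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    # dist[i][j] = edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution or match
            )
    return dist[len(ref)][len(hyp)] / max(len(ref), 1)


# One substituted word out of six reference words gives a WER of 1/6.
print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

Put differently, a 2% WER means roughly one word error for every 50 reference words.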

Deploy VibeVoice on 1Host.ing for seamless, low-latency voice agents—perfect for customer support or interactive demos.

| Benchmark | VibeVoice WER | Speaker similarity |
|---|---|---|
| LibriSpeech test-clean | 2.00% | 0.695 |
| SEED-TTS | 2.05% | 0.633 |

Alibaba's Live Avatar: Infinite Real-Time Video Avatars

Alibaba, with partners from USTC and Zhejiang University, launched Live Avatar—a 14B-parameter diffusion model generating audio-driven avatar videos at 20 FPS in real-time, streaming over 10,000 seconds without drift.

It uses distribution matching distillation to condense multi-step diffusion into 4-step sampling, plus timestep-forcing pipeline parallelism across GPUs (84x speedup), and it handles live mic input with synced expressions, gestures, and motion. Three techniques keep long streams from drifting: Rolling RoPE (stable positioning), Adaptive Attention Sink (distribution alignment), and History Corrupt (noise recovery). Together they enable sci-fi demos such as two AI agents conversing indefinitely.
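Why spreading the denoising steps across GPUs raises throughput can be seen with a simple pipeline model: if each of S GPUs owns one of the S sampling steps and every step takes one time unit, a new block of frames finishes every unit once the pipeline is full, instead of every S units. The sketch below assumes equal step costs and free inter-GPU transfers. It illustrates generic pipeline parallelism, not Live Avatar's actual timestep-forcing scheduler, and it does not attempt to reproduce the reported 84x figure; the function names are hypothetical.

```python
from typing import List, Tuple


def sequential_ticks(blocks: int, steps: int) -> int:
    """One GPU runs every denoising step of every block back to back."""
    return blocks * steps


def pipelined_ticks(blocks: int, steps: int) -> int:
    """One GPU per step: fill the pipeline (steps - 1 ticks), then one block per tick."""
    return blocks + steps - 1 if blocks else 0


def schedule(blocks: int, steps: int) -> List[List[Tuple[int, int]]]:
    """For each tick, list the busy (gpu, block) pairs; GPU g always runs step g."""
    timeline = []
    for tick in range(pipelined_ticks(blocks, steps)):
        busy = [(gpu, tick - gpu) for gpu in range(steps) if 0 <= tick - gpu < blocks]
        timeline.append(busy)
    return timeline


# 4-step sampling on 4 GPUs (assumed one GPU per step), 100 blocks of frames.
print(sequential_ticks(100, 4))  # 400
print(pipelined_ticks(100, 4))   # 103
print(schedule(3, 4)[3])         # [(1, 2), (2, 1), (3, 0)]
```

In steady state every GPU is busy on a different block at a different timestep, so throughput approaches S times the single-GPU rate while per-block latency stays at S ticks.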

On five H800 GPUs, it hits 20 FPS end to end, making it the first practical real-time, high-fidelity avatar model at this scale. Code and models are on GitHub under an open license, and it can be integrated with Qwen for agentic chats.

For virtual assistants or metaverse apps, 1Host.ing's multi-GPU hosting powers these streaming pipelines flawlessly.

Tencent's Hunyuan Video 1.5: Hollywood-Quality Videos at Home

Tencent's HunyuanVideo 1.5, an 8.3B-parameter DiT-based model, brings pro-grade text-to-video (T2V) and image-to-video (I2V) generation to consumer GPUs, producing 5-10 s clips at 480p or 720p in about 75 seconds on an RTX 4090 and upscaling them to 1080p with a built-in super-resolution (SR) stage.

With a 3D causal VAE (16x spatial and 4x temporal compression) and SSTA (selective and sliding tile attention, 1.9x faster than FlashAttention-3), it excels at motion smoothness, prompt adherence, text rendering, and camera control. Human evaluations using GSB (Good/Same/Bad) comparisons rank it first among open models, and it beats Runway Gen-3 and Luma 1.6 on VBench and T2V-Score.
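The VAE's compression ratios translate directly into how much work the DiT has to do. The sketch below estimates the latent grid for a 720p clip, assuming 1280x720 frames, a 121-frame clip, and a causal VAE that encodes the first frame on its own and then every 4 frames into one latent frame. That frame rule is a common convention for causal video VAEs, assumed here rather than taken from Tencent's documentation, and any further patchification inside the transformer is ignored.

```python
from typing import Tuple


def latent_shape(frames: int, height: int, width: int,
                 temporal: int = 4, spatial: int = 16) -> Tuple[int, int, int]:
    """Latent (frames, height, width) for a causal video VAE.

    Assumes the first frame is encoded alone and each following group of
    `temporal` frames becomes one latent frame: T_latent = 1 + (T - 1) / temporal.
    """
    if (frames - 1) % temporal:
        raise ValueError("frames must equal 1 + a multiple of the temporal ratio")
    if height % spatial or width % spatial:
        raise ValueError("height and width must be multiples of the spatial ratio")
    return (1 + (frames - 1) // temporal, height // spatial, width // spatial)


# Assumed 720p clip: 121 frames at 1280x720.
t, h, w = latent_shape(121, 720, 1280)
pixel_positions = 121 * 720 * 1280
latent_positions = t * h * w

print((t, h, w))                                   # (31, 45, 80)
print(latent_positions)                            # 111600
print(round(pixel_positions / latent_positions))   # 999
```

Under these assumptions the transformer works over about 112K latent positions instead of roughly 111 million pixel positions (channels aside), and attention cost over even that many positions is why an optimization like SSTA matters at 720p.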

It is fully open-sourced (Apache 2.0) with training code, the Muon optimizer, and ComfyUI/Diffusers integration, and it runs with as little as 14 GB of VRAM. Demos showcase cinematic shots and physics-aware scenes.

Indie creators, rejoice: 1Host.ing's affordable GPU plans make Hunyuan your backyard studio.

| Resolution | Generation time (RTX 4090) | Steps |
|---|---|---|
| 480p | ~75 s | 8-12 |
| 720p | ~2x longer | 20 |
| 1080p (SR) | Post-process | N/A |

The Road Ahead: Efficiency Meets Immersion

From OpenAI's defensive sprint to Tencent's accessible video wizardry, this week's news spotlights AI's pivot to efficiency and real-time magic. Yet, as models like Garlic and Live Avatar demand massive scale, power and hosting remain key hurdles.

1Host.ing bridges that gap with turnkey cloud for video gen, TTS streaming, and RAG—tailored for innovators like you. Explore our plans and supercharge your next build.

Credit: Insights drawn from AI Revolution's video "OpenAI Code Red, Apple Clara, Microsoft Vibe Voice, Alibaba Live Avatar, Tencent Hunyuan Video 1.5." Subscribe for more breakdowns, and follow on X @AIRevolution.

#AI #ArtificialIntelligence #OpenAI #GarlicModel #CodeRed #AppleClara #RAG #MicrosoftAI #VibeVoice #RealTimeTTS #AlibabaAvatar #LiveAvatar #TencentAI #HunyuanVideo #VideoGeneration #AIVideo #TextToVideo #MachineLearning #TechNews #FutureOfAI #AIHosting